When I went on a nostalgic streaming binge while I had some extra time during my company’s holiday recess between Christmas and New Year’s Day, I got asked a lot of times whether I still had recordings of my streams from over a decade ago when I used to stream nearly full-time hours. The answer, to some people’s surprise, is actually yes—I do indeed have VODs saved of pretty much every stream, as well as original source files of every video I’ve published.

A handful of people then followed up by asking what kind of backup and storage architecture I have that allows me to so reliably retain all this data, especially old data from before there were large advancements in storage solutions. My backup strategy has evolved over the years, starting from just keeping one copy of everything on my laptop and hoping that it doesn’t die, all the way to what I have implemented today.

One of the simplest ways I can think of to describe my current setup is that everything is organized into tiers. Tier 1 is where I actively use files, tier 2 consists of my primary storage locations, tier 3 has my backup storage solutions, and tier 4 is my secondary backup options.

| Tier 1 | Tier 2 | Tier 3 | Tier 4 |

| Local internal hard drives | Synology DS1821+ | WD Elements external hard drive | YouTube |

| Google Drive | Amazon S3 Glacier Deep Archive | Adobe Creative Cloud | |

| Amazon Photos | |||

| Microsoft OneDrive |

As is probably the case for most people, my first line of storage is just the internal drives in my computer. My boot drive is a Toshiba OCZ RD400 PCIe NVMe M.2 128 GB SSD, which holds my operating system and some software (its capacity is that small because I’ve been using the same one for 8 years now, and a 128 GB PCIe NVMe drive back then was pretty good). My SATA drive was originally a 1 TB HDD, but a few years ago, I upgraded to a Western Digital Blue 4 TB SSD and a Samsung 870 EVO 4 TB SSD. I use one as my primary drive and one as a storage drive.

On one of the 4 TB SSDs I use as my primary drive, I have a folder that is synced with Google Drive using Backup and Sync from Google. I used to use Google Drive a lot more, but over the past handful of years, I’ve toned down my usage of third-party service providers in general because I’ve become less trusting of them due to privacy and control concerns (e.g., I don’t want a company to be able to track everything I do, and I don’t want them to have the sole and absolute discretion to terminate my account and lock me out of my data without my input).

Everything in the Tier 1 column, as well as any other data I have that is not in Tier 1, is all in Tier 2, my Synology DS1821+ network-attached storage. This is basically my master vault of data, and close to everything I have ever created in my life exists on this NAS. It’s constantly humming, taking local backup copies of pretty much everything that exists in all my cloud service provider accounts, as well as for some of my friends and clients.

It is loaded with eight Seagate Exos X16 16 TB HDDs and two Samsung 980 Pro PCIe NVMe 2 TB SSDs. The HDDs are organized as a Synology Hybrid RAID (SHR) with two-drive fault tolerance, and the SSD cache is organized as RAID 1 with one-to-one data protection redundancy. The overall capacity for the storage pool is 87.3 TB.

It’s upgraded with a Synology D4ECSO-2666-16G 16 GB memory module, which is installed alongside the original 4 GB memory stick that came with the NAS.

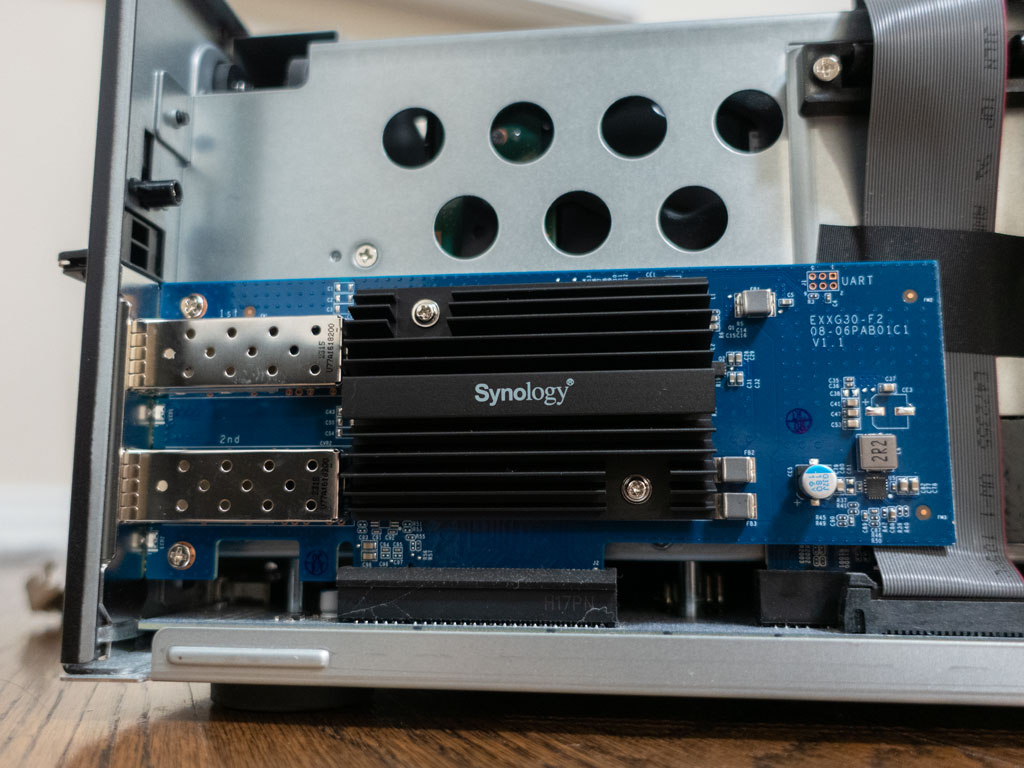

It’s also upgraded with a Synology E25G30-F2 dual-port 25 GbE SFP28 to PCIe 3.0 adapter card… which was not actually the model I was intending to get, but I did not notice until I was done installing the card after it had arrived and was sitting on my desk for a few months. (If you want to avoid the same mistake as me, there might be a chance that the card you’re actually looking for is the Synology E10G30-T2.)

If you’re familiar with tech specs, you may be looking at this and wondering why I have something so powerful, and you’re not wrong—for my purposes, this is comparable to defending your house with a tank instead of just a rifle. Ultimately, it comes down to the fact that I do actually end up using a lot of the features of the NAS and I run enough containers in DiskStation Manager (DSM) to the point that it ends up sort of just being a miniature computer that is working on something 24/7. The second reason is because I like to do things right the first time around, so I wanted to build something that was robust and scalable in preparation for a situation where I would need to make upgrades.

Everything in Tier 2 is then backed up using at least one method in Tier 3. I have a WD Elements desktop external hard drive that is plugged into my NAS via USB and automatically backs up certain files on a routine basis; after sync, this hard drive is kept in a separate physical location as my NAS. I also have a lot of the data uploaded on Amazon Simple Storage Service (S3), a cloud object storage service, under the Glacier Deep Archive storage classification.

The point of Tier 3 is to have redundancy of my data off-site in case a severe natural disaster destroys my residence and everything in it, including my computer and NAS. My external hard drive’s file tree is set up so it is as close to a click-and-drag as possible to a new NAS. Amazon S3 Glacier Deep Archive comes with its own set of issues, such as massive access and egress fees if I ever actually need to retrieve the data, but the storage is basically as cheap as you’ll ever find for cloud storage nowadays, at about a US dollar per terabyte.

Finally, some stuff is also backed up in Tier 4, which consist entirely of free or no-charge third-party services. If I have a video file I want to keep safe, I will upload it to YouTube for free and set it as private or unlisted. A lot of the files I work with in Adobe Creative Cloud and Microsoft 365 are automatically synced to their respective servers using whatever storage space comes with my subscription fee for a software license. I upload most of my photos in raw format to Amazon Photos, where I have unlimited storage thanks to my existing Amazon Prime subscription.

The point of Tier 4 is to protect my data if there is some kind of unprecedented, multi-scope destruction of data that somehow hits my computer, NAS, backup external hard drive, and even Amazon Web Services‘ servers at the same time. In that case, I can hope that some of my videos on Google’s servers and some of my files on Adobe’s and Microsoft’s servers survived, but realistically, I don’t think I will ever have a need to resort to recovering data from Tier 4, and if it ever gets to that point, I think there are going to be much more severe problems with the world to deal with.

Before I end this blog post, I do want to disclaim that I am not an IT professional and you should not blindly copy what I’ve put in this blog post—this is intended to be anecdotal so you can learn more about me, and is not to be construed as a guide on how to build the best backup architecture. Furthermore, I have bought some pretty pricey pieces of hardware in exchange for some of the convenience of the products, and there are a lot of methods out there where you can achieve what I did for lower cost. If you want to take some steps to enhance your data protection, there are a lot of great resources available online and I encourage you to conduct your own research.